Episode Transcript

0:02

This is the sound of turning ideas into

0:04

software. This is the sound of engineering

0:07

and passion.

0:09

Work. Work more. Work

0:11

harder. Experiment. Build.

0:14

Break. And build again. Write

0:17

code. Improve it. Job done.

0:21

Celebrate. Insurance.

0:23

Finance. Retail. Defense.

0:26

Robotics. Energy. Amethix.

0:30

Welcome back to another episode of the Data Science at Home

0:32

podcast. I'm Francesco, podcasting

0:34

from the usual office of Amethix Technologies

0:37

based in Belgium. Today I want

0:39

to speak about special chips.

0:42

Namely, the chips that are executing

0:46

all the things that we know about AI. From

0:49

ChatGPT to large language models

0:51

to all the other neural networks that we

0:53

run on our machines or in the cloud,

0:56

there is something that is actually executing

0:59

them,

1:01

and that something

1:04

is, of course, a chip.

1:07

So there has been, and still is, very

1:09

active research when it comes to

1:12

designing chips that

1:14

are specialized for certain domains,

1:17

and, of course, AI

1:20

is one of those domains for which

1:22

chip makers

1:23

have been spending an enormous

1:26

amount of resources and time and effort in

1:28

order to optimize or

1:31

improve as much as they could the

1:34

performance of these chips that

1:37

are executing special applications like, indeed, neural networks. So

1:41

in this episode, I would like to give

1:43

a general overview of

1:46

what an AI chip is,

1:48

how it works, why it is important,

1:51

and, of course, what we should be expecting

1:53

in the next few years from this

1:55

field. So the idea

1:58

of designing chips

2:00

that are specialized

2:02

for a particular sector is not

2:05

new, of course. We have seen this in the history

2:07

of computing for many

2:09

special applications, especially in

2:11

military and defense, but also in healthcare,

2:14

where we have always had, you

2:16

know, special devices that

2:19

are optimized to execute

2:22

one particular task. So this

2:24

is not what we are usually referring to

2:26

as general-purpose computing, which means

2:29

the computers that we find in the shops

2:31

and we purchase every

2:34

year, probably. So there

2:37

is also gaming, for example, which is

2:40

another special domain

2:43

for which special chips have to

2:45

be designed. And here

2:47

I can think of all the consoles that we know from

2:51

Sony PlayStation to Microsoft

2:53

Xbox and many, many others out there.

2:56

These are devices

2:59

that have particular chips specifically

3:02

designed to run,

3:04

of course, video games. So before speaking

3:07

about AI chips

3:09

and what's going on in that market

3:12

and what we should be expecting, I think we should

3:14

all know about semiconductors

3:17

and transistors. What are these things?

3:19

Because these are basically

3:21

the core concepts that

3:24

one has to understand before moving on.

3:27

So what is a semiconductor? A semiconductor is

3:29

a special material with

3:31

an electrical conductivity between that of a conductor and that of an insulator.

3:34

That's why it's called a semiconductor.

3:37

And it doesn't simply work as a conductor,

3:39

but as a semiconductor, because it

3:42

can switch between being conductive and

3:45

insulating in different circumstances.

3:47

So a semiconductor can

3:50

be conductive and not conductive

3:52

under certain conditions,

3:55

right? A conductor, on the other hand, does not

3:58

have that flexibility. So if

4:00

you take copper, for example, that's a conductor,

4:03

or gold. These are

4:05

conductors that cannot really

4:08

stop conducting

4:10

at will. A semiconductor, instead, has this kind

4:12

of special power. The other

4:15

concept is the concept of the transistor.

4:17

And when

4:20

the concept of the transistor

4:23

was first explained to me many,

4:25

many years ago at university, I

4:28

found it quite weird,

4:31

to say the least, because

4:34

it's a device that

4:37

can, you know, switch between an on

4:39

and an off state, representing one

4:41

and zero. You know, we all know the binary system

4:44

of computing. And essentially, transistors,

4:47

you know, generally speaking, include an

4:49

insulator, usually referred

4:51

to as oxide, between a gate

4:54

and a semiconductor channel, which is

4:56

usually silicon, and the gate is usually a

4:58

metal or another conductive

5:00

material. And essentially what happens

5:02

is that the semiconductor channel

5:05

connects a source and a drain.

5:08

So when a voltage

5:10

is applied

5:13

to the gate, creating an electric field, the channel is put in

5:15

an on state so that the current

5:17

can flow between the source and the drain.

5:20

When the voltage is not applied, the

5:22

channel is put in an off state such

5:24

that the current cannot flow between

5:26

the source and the drain. And so you have the transistor turned off.

5:29

So what the transistor essentially does is convert

5:34

voltages, a low

5:36

level voltage or a high level voltage, into

5:39

on and off, right? And essentially

5:42

on and off means one and zero, which

5:44

we all know are, you

5:47

know, the core of any

5:49

computing system out there, at least binary compute.
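To make the switch idea concrete, here is a toy Python model, not a circuit simulation: each transistor is treated as an ideal voltage-controlled switch that conducts when its gate voltage reaches a threshold. The 0.7 V threshold and 1.0 V supply are hypothetical values picked for illustration. Two such switches in series pull the output low only when both conduct, which is the pull-down half of a NAND gate.

```python
# Toy model: a transistor as an ideal voltage-controlled switch.
# THRESHOLD_V and SUPPLY_V are hypothetical values chosen for illustration.
THRESHOLD_V = 0.7
SUPPLY_V = 1.0


def conducts(gate_voltage: float) -> bool:
    """True if the channel is 'on', i.e. current can flow from source to drain."""
    return gate_voltage >= THRESHOLD_V


def to_bit(voltage: float) -> int:
    """Map a voltage level to a binary digit: on -> 1, off -> 0."""
    return 1 if conducts(voltage) else 0


def nand(a: float, b: float) -> float:
    """Two switches in series pull the output to ground only if both conduct."""
    pulled_down = conducts(a) and conducts(b)
    return 0.0 if pulled_down else SUPPLY_V


if __name__ == "__main__":
    for a in (0.0, SUPPLY_V):
        for b in (0.0, SUPPLY_V):
            print(to_bit(a), to_bit(b), "->", to_bit(nand(a, b)))
```

NAND is a convenient choice because every other logic function can be built from NAND gates alone, so billions of these switches, wired differently, are enough to build a whole processor.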

5:52

These transistors, as many

5:54

of you know, probably, are

5:57

the basic components,

5:59

the number one ingredient, of any chip

6:02

that you purchase out there. From

6:04

the general purpose chips to the special

6:06

chips, they're all made of transistors.

6:09

The difference is how these transistors

6:11

are connected with each other, and of

6:13

course how many of these transistors are

6:16

present on a single chip. And today

6:18

we are speaking about several billion of

6:21

these transistors on a single chip, which

6:24

makes it amazing because the level of complexity

6:27

that you can reach

6:30

with, let's say, 10, 20 billion

6:33

transistors is incredible. Now, of

6:35

course, packing all these transistors into

6:38

one chip increases

6:40

the so-called density of the chip, and

6:43

each transistor also uses some

6:45

energy. So

6:48

one might be

6:50

tempted to increase the number of transistors

6:53

indefinitely in a naive

6:55

way, but of course there are

6:58

limits that

7:00

you immediately hit. For example,

7:03

you cannot have a chip beyond a certain

7:05

density, or you

7:07

would reach, for example, very

7:10

high temperatures or very

7:12

high power consumption, for which that

7:14

chip becomes probably inefficient

7:17

and definitely not useful. Now,

7:19

what we have observed, especially

7:21

in the last 20 years, and more

7:24

particularly in the last 10 years, with

7:26

the advent of AI technology, but

7:28

of course this is a trend that has been going

7:31

on since the 60s and 70s for sure, is

7:33

that chip manufacturers have tried

7:36

to produce, well,

7:39

design first and then produce more

7:41

and more efficient chips. And

7:44

with the advent of AI technology as

7:48

a concept, and so in particular with the advent

7:50

of neural networks, which

7:52

are a very specific kind of algorithm

7:55

to execute on a chip, we have

7:57

seen much more interest

8:00

from the chip

8:02

makers in producing

8:05

specialized chips only for neural

8:07

networks. Of course we have seen this trend

8:09

already happening with video

8:12

games, which are another particular

8:15

algorithm or family of algorithms that

8:18

you can run on your machine. We've

8:20

seen, for example, the advent of

8:23

GPUs, so-called graphics processing units,

8:26

that have been sold

8:28

together with the CPU,

8:31

the central processing unit, which

8:33

is designed more towards

8:36

general-purpose processing.

8:38

So

8:41

when we speak about AI chips, we

8:43

are speaking about several families

8:47

of chips, in fact. It's not just one.

8:49

Of course we all know the GPU, NVIDIA

8:53

and AMD Radeon cards, all the things that we buy

8:56

to play video games, at least that's

8:58

what I do. But essentially

9:00

there are many more families, and in particular

9:03

two more specifically

9:05

designed for AI,

9:08

namely ASICs and FPGAs.

9:11

The FPGA is a very

9:13

fascinating concept. FPGA

9:16

stands for Field-Programmable Gate Array,

9:19

while ASIC stands for Application-Specific

9:22

Integrated Circuits. The acronyms

9:25

should already tell you what

9:27

they are, because with an

9:29

FPGA, as the "field

9:32

programmable" part suggests, you

9:34

can program your chip. In fact, you can,

9:37

let's say, decide and define the

9:39

connections of how the logic blocks are

9:42

connected internally for your particular

9:44

application. So you literally

9:47

burn, as it's indeed called

9:49

in the jargon, the FPGA, which

9:52

means you set up the

9:54

internal wiring of how

9:57

the sub-modules of this chip will

9:59

be connected for your particular application. And

10:02

when you change the application, or

10:04

rather the algorithm that that

10:06

particular chip or FPGA will

10:09

execute, well, you just reprogram

10:12

and you optimize your design,

10:14

the design of the chip, so that it will,

10:16

you know, better accommodate

10:20

that new algorithm. As

10:22

you can see, FPGAs are super powerful

10:24

because, and it's

10:27

not exactly like this, but imagine

10:29

you could design

10:32

your own CPU or your own chip

10:35

at your own will for any algorithm,

10:37

more or less any algorithm. I remember

10:40

at university, we were playing

10:42

with, you know, building a chip

10:44

or designing a chip that would implement

10:47

the encoding and decoding of MP3s

10:51

or Ogg Vorbis, which was

10:53

another format, open

10:55

source format for compressing and playing music,

10:58

pretty much similar

11:00

to MP3, MPEG

11:02

layer 3. And the idea

11:05

there was like, okay, I'm going to design this

11:07

algorithm, it works like this. Now, which

11:09

chip would execute this algorithm

11:11

in the most optimized way? We used

11:14

FPGAs that we wired accordingly.
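Many FPGAs get this reprogrammability from small lookup tables (LUTs): tiny memories whose stored bits define a logic function of their inputs. The sketch below is a simplified Python model, and the class name `LUT2` is hypothetical; a real FPGA also configures the routing between thousands of such blocks. It only shows that "rewiring" a block can amount to loading a different truth table into the same hardware.

```python
class LUT2:
    """Simplified 2-input lookup table, a basic logic element of many FPGAs.

    The configuration is a 4-entry truth table indexed by (a << 1) | b.
    """

    def __init__(self, truth_table):
        self.configure(truth_table)

    def configure(self, truth_table):
        # "Reprogramming" the block just means loading new configuration bits.
        if len(truth_table) != 4 or any(bit not in (0, 1) for bit in truth_table):
            raise ValueError("a 2-input LUT needs exactly four 0/1 entries")
        self.table = list(truth_table)

    def __call__(self, a: int, b: int) -> int:
        return self.table[(a << 1) | b]


if __name__ == "__main__":
    block = LUT2([0, 0, 0, 1])  # configured as AND
    print([block(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 0, 0, 1]
    block.configure([0, 1, 1, 0])  # same block, reconfigured as XOR
    print([block(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 1, 1, 0]
```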

11:16

So we had so-called EDA, electronic design automation,

11:19

which I will speak about later,

11:21

essentially computer-aided

11:23

design (CAD) software that

11:27

allows you to, you know, design the chip on

11:30

paper or on screen first, and then

11:32

burn it. And burn it means

11:35

finalizing your design into

11:38

the chip so that the chip could run that particular

11:41

algorithm in the fastest possible way

11:43

or in the most efficient possible way, for

11:46

example, using less

11:49

electric power and so on and so

11:51

forth. The other

11:54

family of circuits is

11:56

the ASIC, short for application-specific

11:59

integrated circuit. ASICs are less

12:01

flexible than FPGAs, but

12:03

they can be extremely

12:06

fast and extremely optimized. So

12:08

usually ASICs are designed

12:12

for a particular algorithm and

12:14

they will keep executing that algorithm until

12:18

you redesign everything and start

12:20

from scratch. So that's why they're

12:22

not as flexible as an FPGA, but

12:24

they can be much, much faster. Now

12:27

the problem with ASICs is that

12:29

they may not have a long

12:33

market life in the sense that when

12:35

something changes at the software level,

12:39

it's almost impossible to maintain the

12:41

same, or to guarantee the same,

12:43

level of optimization on the chip with an

12:45

ASIC, because you cannot

12:48

reprogram them. They just die, or rather

12:50

stay just like that for the rest

12:52

of

12:53

their days. Now there are several ways

12:56

of course to increase the

12:59

efficiency of an AI chip. And I'll

13:02

try to explore some of them in

13:04

this episode. We will speak about parallel

13:07

compute. We will speak about speeding

13:10

up memory access, just briefly,

13:12

because this is a massive

13:15

topic, super

13:18

nice, very fascinating. Also

13:21

a lot of skills are involved. There is material

13:24

science, there is electronics, there is

13:26

computer science. A

13:28

lot of skills are required

13:31

in order to tackle

13:33

this particular problem. So

13:36

why are AI chips necessary

13:39

for AI? Well, we

13:41

all know that AI chips can be

13:43

tens or even thousands of times

13:45

faster and more efficient than

13:47

CPUs. And even

13:49

if you purchase the latest

13:52

or the top-of-the-line

13:55

NVIDIA graphics card, you

13:58

will notice

14:01

a dramatic improvement with

14:03

respect to the chips of a few

14:05

years ago, even less than two. And

14:09

this is because, of course, these chips are

14:11

designed particularly for special

14:14

games or for special algorithms

14:17

like, as I said, deep neural networks. If

14:20

you make a comparison with

14:23

the good old CPU, or I would say

14:25

the poor CPU, usually

14:27

you will find the difference is

14:30

even more than dramatic.

14:33

Try training

14:35

a neural network on a CPU. You

14:38

can do this by taking, for example, the

14:40

PyTorch library if you're using Python,

14:43

and just deciding to

14:46

train the network on the CPU.

14:48

And then if you are lucky enough to have a GPU

14:51

at home, or rent

14:53

one from the cloud, you can run the

14:55

same algorithm using PyTorch.

14:57

There are ways to do that with,

15:00

you know, one single line of code in

15:02

which you are telling PyTorch, hey,

15:05

this computation, please run it

15:07

on the GPU, because look, surprise,

15:10

I have a GPU now. So if exactly

15:12

the same algorithm is run on a GPU,

15:16

by algorithm, I mean a neural network, you

15:18

will probably see it run 10, 20, sometimes

15:20

even 100 times faster during training. So you

15:25

would train a network in one hour

15:28

when that same network would usually take hundreds

15:31

of hours on a single CPU. So

15:34

that's how important AI

15:37

chips are for AI. The problem

15:39

is with Moore's

15:41

law, which tells

15:44

us that the number of transistors

15:46

in a chip doubles about every

15:48

two years. Apparently,

15:51

this is kind of not happening anymore. And

15:53

so it means that we are kind

15:56

of reaching a limit

15:59

that Moore didn't

15:59

really

16:01

predict, which makes sense

16:03

because Moore's law dates back

16:05

many, many years, to

16:07

the sixties, in fact. And of course,

16:10

you know, it was quite hard back in

16:12

the day to imagine where we would be, or

16:14

where we are today. When

16:17

it comes to CPU speed, that's

16:19

also something that we have improved a lot.

16:21

Well, by "we" I mean researchers

16:23

and manufacturers, to the point that now

16:26

we are also reaching another limit

16:28

there. And all this means that we

16:31

have to find something else in order

16:33

to, let's say, keep enjoying

16:35

this or keep experiencing this

16:38

constant improvement. And the problem

16:41

of all this, of course, can be traced

16:43

back to the transistor because as I said, the

16:46

transistor is the number one ingredient

16:49

of your CPU, GPU,

16:51

SSD, whatever: they are

16:53

all based on transistors. And

16:56

so as transistors have shrunk to

16:58

sizes that are a

17:00

few atoms thick,

17:04

they're actually approaching a fundamental

17:08

lower limit on size. So that's kind

17:10

of the lower bound

17:12

that we can physically reach,

17:15

at least with this material. Either we

17:18

find new materials that have different

17:20

properties, or if

17:22

we want to keep using the same material we

17:24

are using now, like silicon, for example,

17:26

and, of course, I won't get into the details,

17:29

I personally don't even have the right

17:32

background to speak about what materials

17:34

manufacturers are using exactly.

17:36

But

17:39

you know, when you use a certain type of material,

17:42

you are signing a contract with that

17:44

material. That is, you

17:46

cannot break the

17:49

low-level properties of that material.

17:52

And so you cannot go below

17:55

certain sizes, for example.

17:58

Remember, I think we are now

18:00

at 5 nanometer technology, and

18:03

if you want to go beyond the 3 nanometer

18:06

challenge, apparently that would not

18:08

be possible with this material. So

18:11

we need to find new materials. Or

18:14

we can,

18:16

let's say, experience the same constant

18:19

improvement in chips by looking

18:22

at something other than the chip itself,

18:25

at whatever happens around

18:27

the chip. And what is happening around the chip

18:29

is so-called parallelism. So

18:32

more transistors could theoretically

18:35

enable a chip to include many

18:39

more circuits to perform larger

18:42

and larger numbers of calculations in

18:44

parallel. And so the idea

18:46

here is that if you want

18:48

to experience a constant speedup,

18:52

well, this speedup should come from parallelism

18:55

and not just from increasing the number

18:58

of transistors. Because as we said, this

19:00

is possible only up to a certain limit,

19:03

after which it

19:05

no longer makes sense to stuff

19:07

transistors on the same chip. What

19:09

makes sense, and that's what manufacturers

19:12

have in fact already noticed,

19:15

is that major speedups can

19:17

come from parallelism. And parallelism

19:19

in turn can be achieved

19:22

by redesigning chips

19:24

so that, of course,

19:26

they can compute things at the same

19:28

time so that we can have on the same

19:30

chip parallel computation

19:33

going on. And also at the

19:35

same time limiting or minimizing

19:38

the number of so-called serial

19:40

computations, which are the computations

19:42

that have to be performed one after

19:45

another. So a typical serial

19:47

computation cannot start if another

19:49

serial computation hasn't been completed

19:52

before. And this

19:54

is where you're kind of stuck

19:57

because if you increase the number of serial

19:59

computations, you

20:01

will not benefit from, for example, the

20:03

multiple cores that you have on

20:06

your desk because, well,

20:09

they are serial computations. Now, when it

20:11

comes to deep learning, of course, we all know

20:13

that the typical life cycle

20:17

of a deep learning model is

20:19

composed of two essential steps. One

20:22

is training and the other

20:24

is inference. So, during training, we all know

20:26

what happens, you know, from an algorithm

20:28

perspective, stochastic

20:30

gradient descent and loss

20:33

minimization, all the stuff that we have discussed

20:35

on this show many, many times. During

20:38

training, from a low-level perspective,

20:40

one wants to exploit so-called

20:43

data parallelism, while in

20:46

inference,

20:46

often this doesn't happen. So,

20:49

what is data parallelism? Data parallelism means

20:52

that

20:53

you can have many pieces of data

20:55

that are processed

20:57

in parallel. And that's something

21:00

that indeed can be done, and usually is done,

21:03

by

21:05

your GPU, which,

21:08

you know, splits your

21:10

relatively large input

21:13

training data into batches and processes

21:16

the samples in each batch in parallel. And

21:18

why is that possible? It's because the

21:21

operations that

21:23

are performed on each piece of data

21:26

do not depend on anything else. And

21:29

independent operations are

21:31

highly parallelizable. That's the best

21:34

you can get from an algorithm, you know, having

21:36

to repeat computation,

21:39

exactly the same computation, over

21:41

and over again, on different data. And this means

21:43

that I can just chunk my data and

21:47

give it to different modules,

21:49

which will, in parallel, perform the

21:51

computation without interdependence.
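The "chunk my data and give it to different modules" idea can be sketched with Python's standard `concurrent.futures` module, where a thread pool stands in for parallel hardware units. This is only an illustration of the structure under simplifying assumptions: the per-sample `forward` function and its `WEIGHTS` are hypothetical stand-ins for a real network, and in standard CPython threads will not actually speed up this arithmetic the way a GPU would.

```python
from concurrent.futures import ThreadPoolExecutor

WEIGHTS = [0.5, -1.0, 2.0]  # hypothetical weights shared by every worker


def forward(sample):
    """Same computation for every sample: a dot product with WEIGHTS."""
    return sum(w * x for w, x in zip(WEIGHTS, sample))


def process_batch(batch):
    # Each sample depends only on itself and the shared weights,
    # so batches can be processed in any order, or all at once.
    return [forward(sample) for sample in batch]


def chunk(data, batch_size):
    """Split the input into consecutive batches."""
    return [data[i:i + batch_size] for i in range(0, len(data), batch_size)]


def data_parallel(data, batch_size=2, workers=4):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves batch order, so results line up with the inputs.
        results = list(pool.map(process_batch, chunk(data, batch_size)))
    return [y for batch in results for y in batch]


if __name__ == "__main__":
    data = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1], [2, 2, 2]]
    print(data_parallel(data))  # [0.5, -1.0, 2.0, 1.5, 3.0]
```

Because no batch reads another batch's results, the parallel version returns exactly what a plain serial loop over the samples would.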

21:56

But there is the second step, which is inference.

22:00

In inference, you no longer have,

22:02

or might not have, this

22:04

full data parallelism. And

22:07

so when we think about AI chips, we

22:09

have to ask: AI chips

22:11

optimized for which part of

22:14

the computation? Do you want to optimize

22:16

it during training, or

22:18

do you want to optimize it during inference? Because

22:21

these might ideally be two

22:23

completely different chips. And

22:26

so that's where the challenge

22:28

comes with AI. You

22:30

cannot usually accommodate

22:33

the requirements and the needs of

22:35

the researcher who wants to train

22:38

as fast as possible because they need to iterate

22:40

over their algorithm as quickly as possible.

22:43

Remember, they have research projects and they

22:45

have to, you know, this is not an exact

22:47

science, this is kind of a trial and

22:49

error procedure, right?

22:53

Changing the topology of a network, of

22:55

a neural network, hundreds or thousands

22:57

or millions of times if you have automated tools and

23:00

trying to explore, for example, which

23:02

topology best performs that

23:04

particular task. So

23:09

the type of optimization that, let's

23:11

say, the AI researcher would ask

23:14

for is probably not the same

23:17

optimization that someone

23:21

who does only inference is

23:23

asking for. Because for example, for inference,

23:26

you would just want to have

23:28

the fastest possible multiplications

23:31

when it comes to neural networks. That

23:33

is the multiplication of the input

23:36

data by the weight

23:38

matrix and all the matrices

23:40

of the inner layers until the output.
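Those multiplications can be sketched as a plain-Python forward pass: the input vector is multiplied by each layer's weight matrix in turn, with a ReLU nonlinearity between layers. The layer sizes and weight values are made up for illustration, and biases are left out for brevity. An inference chip's job is essentially to run these multiply-accumulate loops as fast as possible.

```python
def matvec(matrix, vector):
    """Multiply a weight matrix (list of rows) by an input vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0.0, v) for v in vector]


def forward(layers, x):
    """Inference: push x through every layer, up to the output."""
    for i, weights in enumerate(layers):
        x = matvec(weights, x)
        if i < len(layers) - 1:  # no activation on the output layer here
            x = relu(x)
    return x


if __name__ == "__main__":
    # Hypothetical 3 -> 2 -> 1 network.
    hidden = [[1.0, 0.0, -1.0],
              [0.5, 0.5, 0.5]]
    output = [[2.0, -1.0]]
    print(forward([hidden, output], [1.0, 2.0, 3.0]))  # [-3.0]
```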

23:43

Think about an autonomous vehicle that, of course, has

23:45

to, you know, make a prediction

23:49

in under a millisecond, because otherwise the car can crash.

23:51

Now, generally speaking, inference chips

23:54

require fewer research

23:56

breakthroughs than training

23:58

chips, because they require

24:00

optimization for fewer computations

24:03

than training chips. And again,

24:05

this is kind of a general rule,

24:09

even though it's not really a rule. Now,

24:12

ASICs require fewer

24:14

research breakthroughs than GPUs and FPGAs,

24:17

because ASICs are narrowly

24:19

optimized for specific

24:22

algorithms. Design engineers consider

24:24

far fewer variables: they

24:26

don't want to build or design

24:29

something that is as general

24:31

as possible, because the

24:33

ASIC is very optimized for specific

24:36

algorithms. So

24:38

what I'm trying to say is that designing

24:41

an ASIC is kind of easier,

24:43

let's say, if I can say that, with

24:46

respect to designing a GPU or an FPGA,

24:48

because the ASIC has to accommodate one

24:51

task and that task only. Now,

24:53

when it comes to designing a circuit

24:56

meant for only one calculation,

25:00

of course, an engineer can translate the calculation

25:02

into a circuit that is optimized for

25:05

that calculation. And that's usually what

25:08

designers do with computer-aided

25:11

design tools, special software that

25:13

allows you to design a circuit. Another

25:16

form of optimization goes

25:18

under the name of low precision computing.

25:21

And we have discussed this a

25:23

couple of times on this show when we spoke about, for

25:26

example, quantization methods for neural networks.

25:29

So low precision computing essentially

25:31

sacrifices numerical accuracy

25:34

for speed and efficiency. And

25:37

it's based on one very simple

25:39

observation, that is, if you

25:42

don't need extreme

25:44

accuracy in your calculation, it

25:47

means that you can use

25:49

far fewer transistors for that

25:51

particular computation. And so

25:53

you can think of it this way: if you have an

25:56

8-bit chip, or rather

25:58

an 8-bit data

26:01

type or variable, the number of possible

26:03

values of that 8-bit variable is

26:05

of course 256. But

26:09

if you have a 16-bit chip, it means that

26:12

a single value can take 65,536 different

26:21

values. If you have a 32-bit chip,

26:24

it means that you can represent about 4.3 billion

26:26

values. And with

26:28

64 bits, of course, we can represent about 18 quintillion, 1.8

26:32

times 10 to

26:34

the power of 19, right, potential values.
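All of these counts are powers of two: an n-bit variable has 2^n distinct bit patterns, whatever number format they are later interpreted as. A quick check in Python (the helper name `num_values` is just for illustration):

```python
def num_values(bits: int) -> int:
    """Distinct bit patterns an n-bit variable can hold: 2 ** n."""
    return 2 ** bits


for bits in (8, 16, 32, 64):
    print(f"{bits:>2}-bit: {num_values(bits):,} values")
# Prints:
#  8-bit: 256 values
# 16-bit: 65,536 values
# 32-bit: 4,294,967,296 values
# 64-bit: 18,446,744,073,709,551,616 values
```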

26:36

So this means

26:39

that higher-bit data types can represent

26:44

a wider range of numbers and

26:47

of course a larger set of integers

26:49

or a larger set of floating point numbers

26:53

and so on and so forth, which means that the computation

26:56

can be much more precise. Now, if

26:58

you are launching a rocket

27:01

that requires calculations

27:05

at a very high precision, of

27:07

course you want to use 64-bit, 128-bit, or probably even higher-precision

27:12

machines. Special 256-bit processors in military and defense

27:14

are not an exception. In

27:20

fact, quite the rule. But if

27:22

you're running, for example, a neural network

27:25

for a task that is

27:28

a probabilistic method, let's say,

27:30

for which 95% versus 94.9% doesn't make a big

27:33

difference for

27:37

the task at hand, or even

27:40

one where 90%

27:41

versus 80% probability of something

27:43

doesn't make

27:45

a

27:47

big difference for your particular use case, probably

27:50

you would like to, let's say, sacrifice

27:54

a bit of accuracy and

27:56

therefore reduce the number of bits of data for

28:00

each single computation, which means

28:02

reducing, of course, the number of transistors that

28:04

will perform that computation for you.
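Here is a minimal sketch of one common low-precision technique: symmetric linear quantization of floating-point values to 8-bit integers with a single shared scale factor. It is only one of several quantization schemes, not necessarily what any specific chip or library does, and the function names are hypothetical. Each value then needs 8 bits instead of 32, at the cost of a rounding error of at most half a quantization step.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to the signed 8-bit range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats from the 8-bit codes."""
    return [x * scale for x in q]


if __name__ == "__main__":
    weights = [0.81, -0.27, 0.05, -1.0]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    print(q)  # [103, -34, 6, -127]
    # Each restored value is within half a quantization step of the original.
    print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2)
```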

28:07

And so by doing this, you know, so-called

28:10

low precision computing, you can save

28:12

energy, you can save space, or

28:14

you can just utilize those transistors for

28:16

something else. Another

28:20

form of optimization is

28:22

memory optimization. Memory optimization is

28:24

also very important because some AI

28:29

chips now include sufficient memory

28:29

to store even the entire AI

28:31

algorithm on chip. And

28:34

this is essential because the

28:36

alternative to this is, you

28:39

know, using an off-chip

28:41

memory, and of course connecting to

28:43

the off-chip memory, communicating and sharing

28:46

data, transferring data back

28:49

and forth from the chip that

28:51

is performing the compute to

28:53

the memory that is storing the

28:55

values, right? Or storing

28:58

the algorithm. So there is this communication

29:00

that must happen between the chip and

29:03

the off-chip memory. And

29:06

that not only requires time,

29:09

but also requires energy. And

29:11

that's because you have a channel,

29:13

usually a bus if you use a von

29:16

Neumann architecture, that

29:19

you need to keep busy with bits

29:21

and bytes in order to move

29:25

data from the memory to the chip, and

29:28

the other way around. Now,

29:30

this means that you need energy. This means that

29:32

you are dissipating heat and

29:35

all the consequences of that. Many

29:38

AI chips have, as I said, a

29:41

sufficient amount of memory on chip so

29:44

you can skip that communication with off-chip

29:47

memory. Last but not least,

29:49

domain-specific languages, or so-called

29:52

DSLs. We have

29:54

seen a lot of Python recently

29:56

in AI, which is extremely

29:58

wrong now. Now, Python

30:01

is good for research, but

30:03

it's definitely not good for production.

30:06

We all know this, you know this, and

30:09

if you don't, just do it. Because

30:12

programming languages that are not optimized

30:15

for that particular task should not

30:17

be used, period. That's

30:20

why C will never die, in my

30:22

opinion, and probably new languages like

30:24

Rust will eventually take

30:27

over from C

30:29

thanks to its safety

30:31

and all the other properties of that language. But

30:34

what I mean is that low-level languages are,

30:37

or should

30:39

be the languages of AI. I'm not the

30:41

first to say this. I think Elon

30:43

Musk said that, even though I don't like that

30:45

guy much. But yeah,

30:48

sometimes he says things that

30:49

make sense. Of course, as

30:51

I mentioned before, there are also libraries

30:53

like PyTorch, and these are code

30:56

libraries that are written

30:59

in low-level languages, I think C and

31:01

C++ for PyTorch. But

31:03

they expose bindings to

31:06

Python, which means that one can

31:09

use Python, but behind

31:11

the curtain, in fact, they are calling a precompiled

31:16

C, C++, or Rust

31:19

version of the application.
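As a minimal illustration of Python calling precompiled C code, the standard-library `ctypes` module can load a shared C library and call one of its functions directly. This is a much simpler mechanism than the compiled extension modules that libraries like PyTorch actually ship, and the sketch assumes a POSIX system (Linux or macOS), where `ctypes.CDLL(None)` exposes the C standard library already loaded into the Python process.

```python
import ctypes

# Load the symbols already linked into the running Python process,
# which on POSIX systems include the C standard library (libc).
libc = ctypes.CDLL(None)

# Declare the C signature: size_t strlen(const char *s);
libc.strlen.argtypes = [ctypes.c_char_p]
libc.strlen.restype = ctypes.c_size_t

# The loop over characters runs in compiled machine code, not in Python.
print(libc.strlen(b"data science at home"))  # 20
```

Python only marshals the arguments and the return value here; the actual work happens inside the compiled C function.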

31:22

And you are just using Python to

31:24

call that piece of code, machine

31:27

code, in fact. Of

31:29

course, this episode could never be

31:32

exhaustive when it comes to describing

31:34

AI chips and what happens at

31:36

the very low level of artificial

31:39

intelligence, which is, indeed, chipmaker stuff.

31:42

But I hope I gave you a very

31:46

brief introduction to what is

31:48

running inside that box

31:51

called a computer. Thank you so much for

31:53

listening. I'll speak with you next time.

31:56

on

32:00

iTunes, Stitcher, or Podbean to get

32:02

fresh new episodes. For more, please follow

32:04

us on Instagram, Twitter, and Facebook, or

32:07

visit our website at datascienceathome.com.

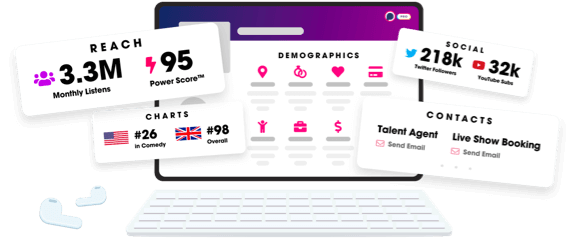

Unlock more with Podchaser Pro

- Audience Insights

- Contact Information

- Demographics

- Charts

- Sponsor History

- and More!

- Account

- Register

- Log In

- Find Friends

- Resources

- Help Center

- Blog

- API

Podchaser is the ultimate destination for podcast data, search, and discovery. Learn More

- © 2024 Podchaser, Inc.

- Privacy Policy

- Terms of Service

- Contact Us